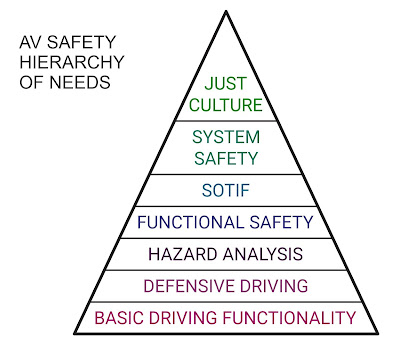

I've been on a personal journey to understand what safety really means for autonomous vehicles. As part of this I repeatedly find myself in conversations in which participants have wildly different notions of what it means to be "safe." Here is an attempt to put some structure around the discussion:

One inspiration for this structure is Maslow's famous hierarchy of needs. The idea is that organizations developing autonomous vehicles have to take care of the lower levels before they can afford to invest in the higher levels. For example, if your vehicle crashes every 100 meters because it struggles to detect obstacles in ideal conditions, worrying about the nuances of lifecycle support won't get you your next funding round.

To succeed as a viable at-scale company, you need to address all the levels in the AV maturity hierarchy. In reality, though, companies will likely climb the levels like rungs of a ladder. To draw the parallel to Maslow's hierarchy of needs: if a company is starving for cash to run its operations, it is going to care more about the next demo or funding milestone than about lifecycle safety considerations. That will only change when venture funders bake the higher levels of this safety maturity hierarchy into their milestones.

- Basic driving functionality: the vehicle works in a defined environment without hitting any objects or other road users, at the scale defined by a funding milestone demo. When people say that their vehicle is safe because it has high crash safety ratings, that claim belongs at this level. (I personally prefer my safety to happen without the part where the vehicle crashes.)

- Defensive driving: the vehicle has expert driving skills and actively avoids driving situations that present increased risk. This is analogous to sending a human driver to defensive driving school. At some point the automated driver becomes an expert at driving in failure-free situations.

- Systematic hazard analysis: engineering effort has been spent analyzing and mitigating risks not just from driving functions, but also from potential technical malfunctions, forced exits from the intended operational design domain, and so on (for example, HARA from ISO 26262). Common hazards that are difficult or expensive to mitigate might well be pushed onto the driver (e.g., incomplete redundancy to handle component failure, or required driver intervention to mitigate risks).

- Functional safety: analysis and redundancy have been added, and a principled approach (e.g., based on safety integrity levels) has been taken to ensure risks from technical faults in the system have been mitigated (e.g., ISO 26262 conformance).

- Safety of the Intended Function (SOTIF): ensuring "unknowns" have been addressed, dealing with environmental influences (e.g., not all radar pings will be returned), closing gaps in requirements, and accounting for aspects of machine learning (e.g., ISO 21448 conformance).

- System level safety: accounting for things beyond just the driving task, including lifecycle considerations. This means ensuring that hazard analysis and mitigation extend to process aspects, and that a safety case has been used to ensure acceptable safety (e.g., ANSI/UL 4600). Cybersecurity needs to be addressed to achieve system safety, but work on it should not wait until reaching this level.

- Just Safety Culture: operating and continuously improving the organization, and its execution of the other levels of the hierarchy, according to Just Culture principles rather than blame.

Specific common anti-patterns for Just Culture relevant to autonomous vehicles are:

- Using humans as Moral Crumple Zones (blaming humans so as to evade addressing technical or process failures).

- Using propaganda and regulatory lobbying to deflect liability away from companies, reducing pressure to achieve acceptable safety.

As with Maslow's hierarchy, the levels are not mutually exclusive. Rather, all levels need to operate concurrently, with the highest concurrently active level indicating progress toward safety maturity.

You might see this differently, see some things I've missed, etc. Comments welcome!

Summarizing a few comments so far:

- Where is cybersecurity? It is there, but it doesn't feel like there is a particular layer below which you can ignore it and above which you need it 100% covered. I've seen it done before safety, or barely done at all. Commonly both (security on the IT infrastructure side, insecurity on the vehicle side). It might be a parallel track on the side.

- Socio-technical aspects. I'm thinking perhaps these go between system safety and Just Culture. (In a sense it's system-of-systems safety if the other systems are non-technical systems.) This will need to include not only road systems and infrastructure (both of which UL 4600 includes in "system safety"), but also regulatory systems, insurance, and so on.

- Whether functional safety should be at a lower level. OEMs typically do it sooner, but in my experience non-OEMs don't, so the layer order might simply change depending on a company's history.